Everything You Need to Know About Apple’s Personal Voice Feature

Updated: Jan. 25, 2024

Apple recently previewed some new accessibility features, one of which can replicate your voice. Here’s what you need to know about Personal Voice and when you can expect to see it.

Apple devices are well known for being user-friendly, which certainly contributes to the enduring mass appeal of iPhones, iPads and MacBooks. This goes beyond simple, intuitive controls and slick software, however. Gadgets like the iPhone also include many accessibility settings that make them easier to use. These features range from conveniences like keyboard shortcuts and other handy iPhone tricks to functions designed to assist people with disabilities or other impairments.

Apple recently announced some new and more advanced accessibility features, including one that uses machine learning to replicate your voice. The feature is called Personal Voice, and it will let people with limited speaking abilities communicate using a synthesized version of their own voice. Here’s everything we know about Personal Voice, including when you can expect to see it, and some details about the other new accessibility features Apple is bringing to its devices this year.

What is Personal Voice and how does it work?

Personal Voice is one of the new on-device accessibility technologies Apple is rolling out this year. It’s designed to work with Live Speech, another of the accessibility features Apple recently previewed. Live Speech allows users to type something and have the iPhone, iPad or MacBook read it aloud. It can be used during FaceTime calls and in-person conversations, making it a useful tool for people with speech impairments.

The new Personal Voice feature enhances this experience by allowing you to customize Live Speech with a synthesized version of your voice. Personal Voice uses machine learning to recreate the sound of a person’s voice for use during Live Speech, allowing speech-impaired users to connect with friends and family in a personalized way.

How to enable Personal Voice

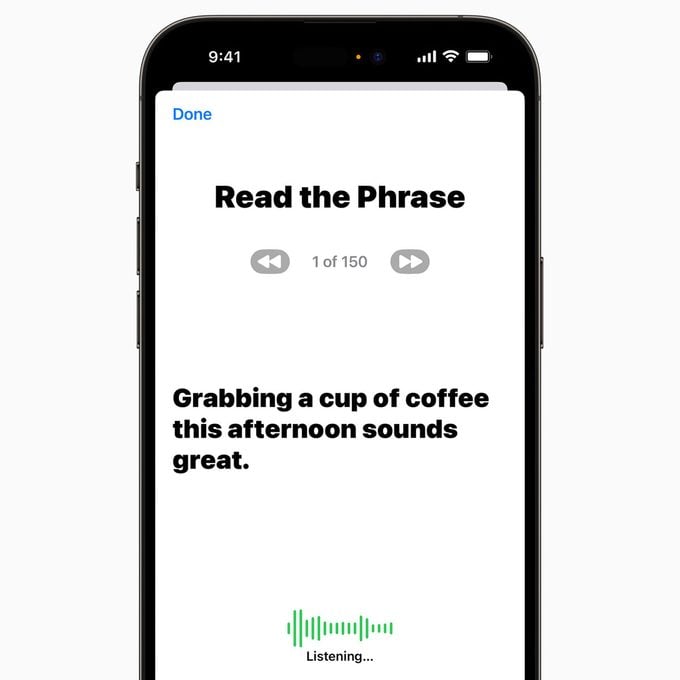

The catch with Personal Voice is that it needs a vocal sample from the user to recreate that person’s voice. Unfortunately, this cannot be done with a pre-recorded voice sample; the device owner has to read a series of on-screen text prompts aloud to record about 15 minutes of audio. This means that those who are already unable to speak cannot set up Personal Voice. However, they will still be able to use the Live Speech text-to-voice function.

We don’t yet have detailed information on how to enable Live Speech and Personal Voice, but Apple gave us a preview of how users can set them up. Once these features arrive on Apple devices later this year, they will most likely be found in the Settings app under “Accessibility.” There, you should be able to enable and set up Live Speech and Personal Voice for use on your iPhone or iPad.

Other new accessibility features from Apple

Apple announced other new accessibility features coming to its devices along with Live Speech and Personal Voice sometime this year. Here are two new tools users can look forward to, as well as some changes to existing accessibility features:

- Assistive Access: This feature pares popular apps down to their essential functions and presents them in a single, easy-to-use interface. Assistive Access combines Phone and FaceTime into a single Calls app and offers simplified versions of Messages, Camera, Photos and Music. The interface also uses high-contrast icons and large, easy-to-read text, offering a heavily streamlined experience for individuals who may prefer a less complicated way to communicate.

- Point and Speak: This is a new function built into the Magnifier app. It makes it easier for those with impaired vision to interact with physical objects that have text labels, such as buttons. Point and Speak uses input from the camera and LiDAR scanner on an iPhone or iPad to read text labels aloud as the user moves a finger across an object (for example, the keypad controls on a kitchen appliance). Point and Speak can also be used alongside other Magnifier functions, such as Door Detection and Image Descriptions, helping those with vision disabilities navigate their environment more easily using audio cues.

- Made for iPhone hearing devices: Hearing devices that already work with iPhones and iPads will soon be able to pair with Mac computers as well.

- Voice Control: People who type with their voice will automatically see phonetic suggestions for words that sound alike, such as “there” and “their,” so it’s easier to choose the correct word when communicating.

- Pause moving images: Users can set their devices to automatically pause moving images, such as GIFs, in Messages and Safari. This is useful for those who may be sensitive to rapid animations.

Source:

- Apple: “Apple introduces new features for cognitive accessibility, along with Live Speech, Personal Voice, and Point and Speak in Magnifier”